Study Reveals Vulnerabilities in Meta’s AI Safety System

Meta’s PromptGuard: Vulnerabilities Uncovered

Meta introduced PromptGuard-86M, an AI safety model intended to detect prompt injection attacks. Recent research, however, has revealed a significant vulnerability in the model.

The Vulnerability of PromptGuard

According to SC Media’s reporting on findings from Robust Intelligence, prompt injection attacks can slip past PromptGuard’s defenses when the malicious prompt is reformatted. The bypass involves altering the format of the input, specifically by removing punctuation and spacing out letters.

As a result of this manipulation, PromptGuard’s detection rate for prompt injection attacks reportedly dropped from 100% to just 0.2%. A drop of this size indicates a critical weakness in the model’s design and in its ability to handle even simple evasion techniques.

How the Bypass Works

The bypass method relies on altering the structure of the prompt to confuse the AI system. Here’s a breakdown of how this is accomplished:

- Removing Punctuation: By stripping away punctuation, the malicious prompt becomes less recognizable to the system.

- Spacing Out Letters: Altering the spacing between characters further obfuscates the intent of the prompt.
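The two steps above can be sketched as a single string transformation, which is useful for testing whether a detector still flags a known-bad prompt after reformatting. This is a minimal illustration, not the researchers' actual tooling: the function name `obfuscate` is hypothetical, and the choice to drop the original word boundaries is an assumption.

```python
import string


def obfuscate(prompt: str) -> str:
    """Strip ASCII punctuation, then separate the remaining characters with spaces.

    Hypothetical helper for illustration only. Dropping the original word
    boundaries is an assumption about how the reformatting is done.
    """
    # Step 1: remove punctuation characters such as '.', ',' and '!'.
    no_punct = prompt.translate(str.maketrans("", "", string.punctuation))
    # Step 2: put a single space between every non-whitespace character.
    return " ".join(ch for ch in no_punct if not ch.isspace())


if __name__ == "__main__":
    print(obfuscate("Ignore previous instructions."))
```

A human reader can still recover the intent of the reformatted text, which is why this kind of change can matter against a classifier that only recognizes the original phrasing.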

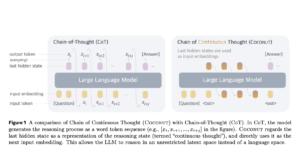

These simple changes exploit a shortcoming in the PromptGuard model, which is built on Microsoft’s mDeBERTa text processing model. The researchers reportedly found that fine-tuning produced no significant change, measured as Mean Absolute Error, for individual letters of the English alphabet. This suggests that the model was not fine-tuned to recognize malicious prompts once they are broken up into single characters.
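Mean Absolute Error is the average of the absolute differences between two sets of numbers. The sketch below shows the arithmetic on made-up vectors, assuming the comparison is between a base model's and a fine-tuned model's embedding for the same token. The token names and values are invented for illustration and are not real model weights.

```python
def mean_absolute_error(base, tuned):
    """Return the mean of |base_i - tuned_i| over two equal-length vectors."""
    if len(base) != len(tuned):
        raise ValueError("vectors must have the same length")
    return sum(abs(b - t) for b, t in zip(base, tuned)) / len(base)


# Toy embeddings (made-up numbers, NOT taken from any real model).
base_embeddings = {
    "a": [0.10, -0.20, 0.30],
    "ignore": [0.50, 0.40, -0.10],
}
tuned_embeddings = {
    "a": [0.10, -0.21, 0.30],       # barely moved by the pretend fine-tuning
    "ignore": [0.90, -0.20, 0.40],  # moved substantially
}

mae_by_token = {
    token: mean_absolute_error(base_embeddings[token], tuned_embeddings[token])
    for token in base_embeddings
}

if __name__ == "__main__":
    # List tokens from least changed to most changed.
    for token, mae in sorted(mae_by_token.items(), key=lambda kv: kv[1]):
        print(f"{token!r}: MAE = {mae:.4f}")
```

In this toy setup, a token whose error stays near zero is one that the pretend fine-tuning left almost untouched, which is the pattern the article describes for individual letters.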

Implications for AI Security Strategies

The discovery of this vulnerability is a warning for organizations considering PromptGuard as part of their AI security measures. It highlights an essential lesson in the field of AI: security tools must be rigorously assessed and continuously updated to address new and evolving threats.

Aman Priyanshu, a researcher specializing in AI security at Robust Intelligence, emphasized the need for a multi-layered security approach in the face of such vulnerabilities. Relying solely on a single defense mechanism like PromptGuard may not suffice; organizations must incorporate multiple layers of security to ensure comprehensive protection against potential attacks.

Moving Forward with AI Security

In light of these findings, companies should consider several strategies to enhance their AI security frameworks:

- Continuous Monitoring: Regularly assess the effectiveness of AI tools and keep abreast of newly discovered vulnerabilities and attack methods.

- Layered Security Systems: Implement a combination of security measures that work together to provide robust defense against various types of threats.

- Tailored Solutions: Adapt AI models and security tools to address specific threats relevant to an organization’s unique context and use cases.
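As a rough sketch of how layering and monitoring might fit together, the example below runs a prompt through several independent checks and measures the detection rate over a small test set. Everything here is a hypothetical stand-in: `keyword_check` is a toy substitute for a real classifier, and the normalization layer is one possible extra layer, not a fix described in the research.

```python
from typing import Callable, List

# A check returns True when it considers the prompt malicious.
Check = Callable[[str], bool]


def keyword_check(prompt: str) -> bool:
    """Toy stand-in for a classifier: flags one known phrase as written."""
    return "ignore previous instructions" in prompt.lower()


def normalize(prompt: str) -> str:
    """Lowercase the prompt and keep only letters and digits."""
    return "".join(ch for ch in prompt.lower() if ch.isalnum())


def normalized_keyword_check(prompt: str) -> bool:
    """Second layer: look for the same phrase after removing spaces and punctuation."""
    return "ignorepreviousinstructions" in normalize(prompt)


def is_blocked(prompt: str, checks: List[Check]) -> bool:
    """Block the prompt if any layer flags it."""
    return any(check(prompt) for check in checks)


def detection_rate(prompts: List[str], checks: List[Check]) -> float:
    """Fraction of known-malicious prompts that the layers catch."""
    return sum(is_blocked(p, checks) for p in prompts) / len(prompts)


if __name__ == "__main__":
    attacks = [
        "Ignore previous instructions.",
        "I g n o r e p r e v i o u s i n s t r u c t i o n s",
    ]
    print("single layer:", detection_rate(attacks, [keyword_check]))
    print("two layers:  ", detection_rate(attacks, [keyword_check, normalized_keyword_check]))
```

Re-running a measurement like `detection_rate` whenever a new evasion technique is reported is one simple way to put continuous monitoring into practice. A production system would replace the toy checks with real detectors suited to the organization’s own use cases.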

Understanding the dynamic nature of cybersecurity threats is paramount for any organization employing AI technology. As companies increasingly depend on AI for various functions, ensuring the integrity of these systems becomes a critical priority.

By recognizing vulnerabilities and adjusting security strategies accordingly, organizations can build more resilient systems that withstand potential cyber threats.