Top 3 Exciting New Features of Meta’s Llama 4 AI Models

Mixture of Experts (MoE) Architecture

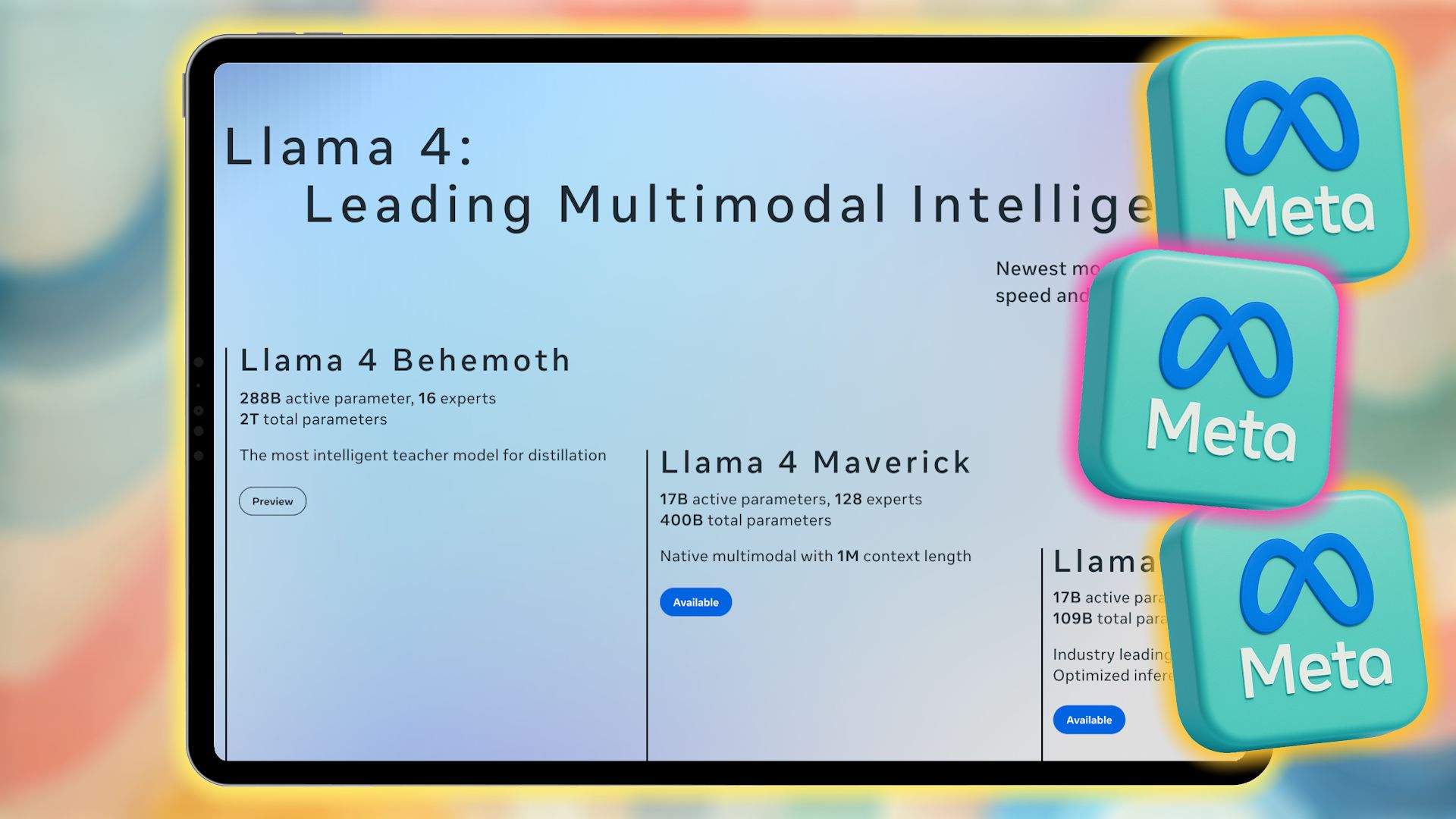

In early April 2025, Meta introduced the Llama 4 series, which features significant upgrades aimed at enhancing artificial intelligence performance. A standout feature within this series is the Mixture of Experts (MoE) architecture. This innovative approach is new to the Llama line and diverges from the traditional dense transformer models used in previous versions.

Under this new architecture, only a selected number of parameters are activated for each token. For instance, in Llama 4 Maverick, only 17 billion of the 400 billion total parameters are active at any one time. This model employs 128 routed experts in conjunction with a shared expert. On the other hand, Llama 4 Scout, the smallest model, has 109 billion parameters but activates just 17 billion using 16 experts.

The largest variant, Llama 4 Behemoth, activates 288 billion parameters out of nearly two trillion total parameters by utilizing 16 experts, which efficiently allocates relevant resources. This new architecture not only enhances computational efficiency during training and inference but also cuts down on operational costs and reduces latency. Meta claims that thanks to the MoE architecture, Llama 4 can function on a single Nvidia H100 GPU, a commendable feat considering the model’s extensive parameters, especially when comparing it to other models like ChatGPT that often require several GPUs for similar tasks.

Native Multimodal Processing Capabilities

Another vital enhancement in the Llama 4 models is their native ability to process multiple modalities simultaneously. This means that they can understand both text and images at the same time. The models achieve this functionality through a fusion of text and vision tokens during their initial training phase, allowing them to integrate diverse data types cohesively.

Previously, with the Llama 3.2 upgrade, Meta released a variety of models, including multimodal vision and text models. Now, with the advancements in Llama 4, there is no longer a need for separate models for text and visual processing. The enhanced vision encoder within Llama 4 supports complicated visual reasoning tasks and the processing of multiple images at once. This broadens the applications of the Llama 4 models, making them suitable for various advanced tasks that demand sophisticated text and image comprehension.

Industry-Leading Context Window

Llama 4’s AI models feature an extensive context window of up to 10 million tokens, a landmark achievement in the industry. While Llama 4 Behemoth is still being trained, Llama 4 Scout has set a record with a maximum context length that allows over five million words to be input simultaneously.

This new capability marks a significant upgrade from Llama 3’s previous 8K tokens and the subsequent increase to 128K with the Llama 3.2 update. Even Llama 4 Maverick, which supports a one-million token context length, exemplifies impressive advancements in AI technology.

Compared to its predecessors, including even the top-performing Gemini models with their two-million token limits, Llama 4’s expansive context length puts it ahead in the competition. This substantial context window allows the Llama 4 models to excel in tasks that require the analysis of vast amounts of information, such as conducting multi-document reviews or deep-dive analysis of large codebases.

Extended conversations are also within Llama 4’s capabilities, offering a more dynamic interaction model. Notably, while previous Llama models, along with models from other companies, had limitations in engaging in lengthy discussions, the new context window empowers Llama 4 to perform extensively in this area.

The Llama 4 series not only ranks high for reasoning abilities, thanks to its new context window, but it also prioritizes efficiency through its innovative MoE architecture in both training and application. This combination of features positions Llama 4 as a powerful, versatile AI model with capabilities that potentially outstrip its competitors in areas like reasoning and coding.