The In-depth Research Challenge — Benedict Evans

Understanding the Challenges of Large Language Models (LLMs)

Large Language Models (LLMs) are fascinating tools that can process and generate human-like text. However, there are inherent challenges and limitations in how they retrieve and present information. This article explores some of these challenges, particularly focusing on the reliability of data and expectations from LLMs.

Fluctuations in Data

Take the installed base of mobile operating systems: different sources can disagree significantly. A report from a Japanese regulatory body indicates that around 53% of devices use Android while 47% use iOS. However, figures from companies like Kantar can fluctuate by as much as 20 percentage points month-to-month, which raises the question of what they are actually measuring. This inconsistency makes it difficult to rely on any single source for accurate data.
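One way to make the "what is this source measuring?" check concrete is to compute the largest month-to-month swing in a series. The sketch below is only illustrative: the function name `max_monthly_swing` and the monthly readings are invented for this example and are not Kantar's figures or anything from the regulator's report.

```python
def max_monthly_swing(shares):
    """Return the largest absolute change between consecutive readings,
    in percentage points."""
    return max(abs(later - earlier) for earlier, later in zip(shares, shares[1:]))


# Hypothetical monthly share readings (percent), invented for illustration only.
illustrative_series = [55.0, 62.0, 48.0, 66.0, 51.0]

swing = max_monthly_swing(illustrative_series)
print(f"Largest month-to-month swing: {swing} percentage points")
```

A series with swings of this size is hard to read as an installed base, which would normally be expected to move slowly; it more plausibly reflects something else, such as a sales or survey sample.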

Types of Questions and Answers

Asking LLMs for information can be tricky. For example, if someone asks about the adoption rate of mobile operating systems, which specific data point are they seeking? There are several interpretations:

- Unit sales: How many units were sold?

- Installed base: How many devices are currently in use?

- Usage share: How often do users engage with each platform?

- Spending share: How much do users spend on apps for each platform?

Each of these metrics can lead to different data sources and interpretations, complicating the retrieval of straightforward answers.

The Nature of LLM Queries

LLMs do not function like traditional databases. A database handles deterministic questions: a clear query returns a specific, fixed answer. An LLM is probabilistic instead: it is designed to interpret the likely intent behind a question and produce a likely response. This distinction leads to a mismatch, because users may want precise data while the LLM offers a probabilistic guess. The result can be misleading, with users receiving an answer that does not accurately reflect the question posed.

Reliability and Progress

While it is common for developers to assert that their models are continually improving, this can be misleading. Incremental improvements may not address fundamental issues with accuracy. If a model is only 85% accurate today, a slight improvement does not make it much more trustworthy, because the user still cannot tell which answers are the wrong ones. Many users might hesitate to rely on LLM outputs when there is uncertainty about their correctness.
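A rough piece of arithmetic shows why a small accuracy gain changes little. The sketch below assumes, purely for illustration, that the 85% figure applies independently to each number in a report; the article does not define accuracy this way, and the function name `chance_all_correct`, the 90% comparison point, and the ten-figure report are all hypothetical.

```python
def chance_all_correct(per_item_accuracy, n_items):
    """Probability that every one of n_items is correct, assuming each item
    is right independently with probability per_item_accuracy."""
    return per_item_accuracy ** n_items


# Compare the article's 85% with a hypothetical improved model at 90%,
# for a report containing ten figures.
for accuracy in (0.85, 0.90):
    p = chance_all_correct(accuracy, 10)
    print(f"{accuracy:.0%} per figure -> {p:.1%} chance all ten are right")
```

Under these assumptions, both models leave a ten-figure report more likely than not to contain at least one error, so the reader has to check every figure either way.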

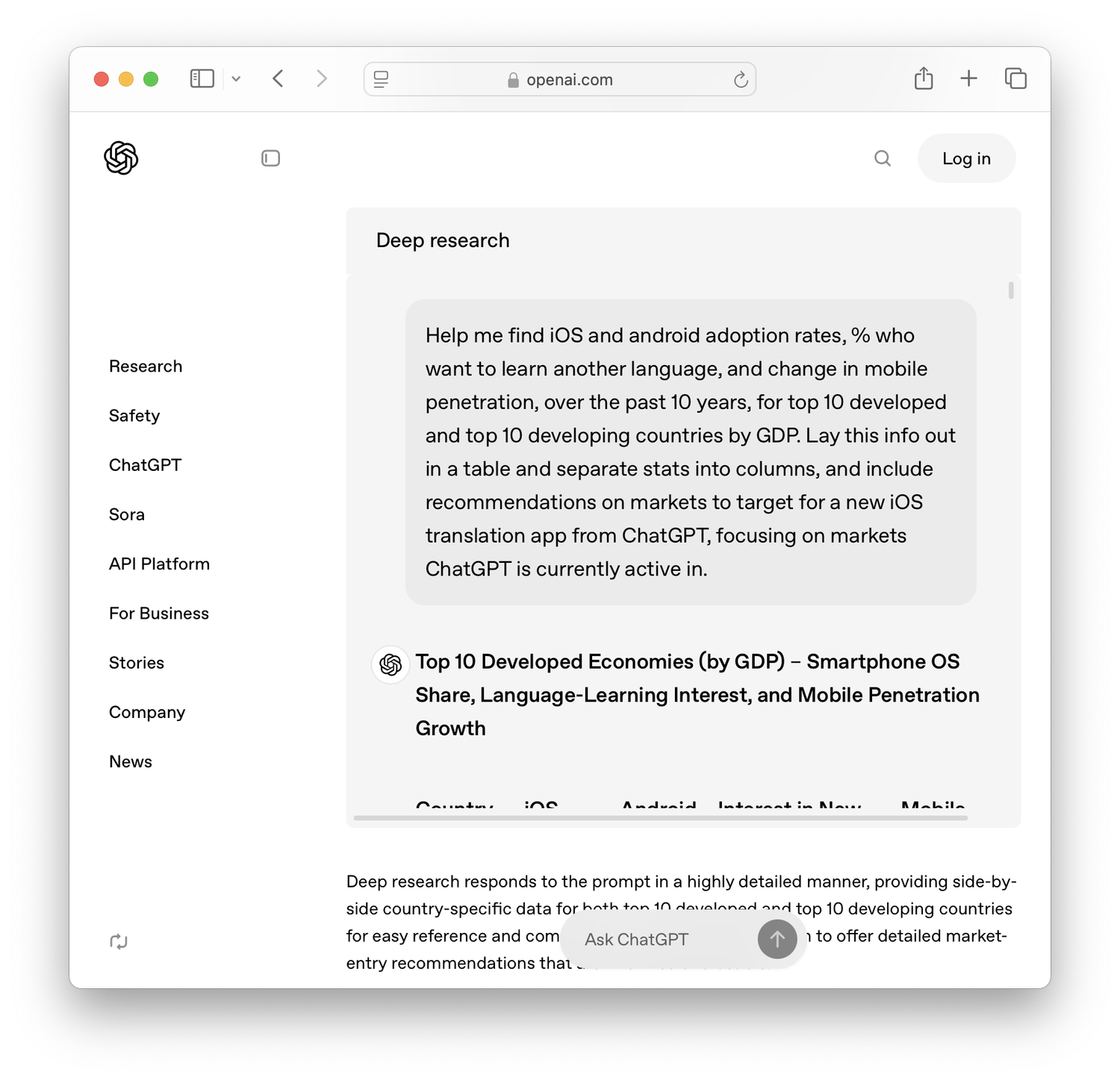

Applications and Limitations

Despite their shortcomings, LLMs have proven to be useful in specific contexts. They can streamline the process of generating reports or summarizing vast amounts of information quickly. For instance, if a user needs a comprehensive report and has expertise on the topic, an LLM can transform days of work into mere hours. However, users must ensure they review and amend the output carefully.

The Bigger Picture

Two critical questions remain regarding the evolution of LLM technology:

- Error Rate: Will the error rate decrease in the future? Understanding this will shape how we build applications around these models. Depending on the answer, developers may need to create systems that expect inaccuracies and require human oversight.

- Market Position: Companies that develop LLM technology have not yet established strong market positions based on product uniqueness. Their offerings often revolve around providing APIs for others to build upon. This raises questions about the sustainability and future of these systems.
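The idea of a system that expects inaccuracies and requires human oversight, raised under the error-rate question, can be sketched as a simple review gate. This is a minimal illustration under stated assumptions, not a description of any real product: the `DraftFigure` class, its fields, and both helper functions are hypothetical, and the 53% value is simply the Android figure quoted earlier in the article.

```python
from dataclasses import dataclass


@dataclass
class DraftFigure:
    """A figure produced by a model, untrusted until a person reviews it."""
    label: str
    value: float
    reviewed: bool = False  # set to True only after a human has checked it


def publishable(figures):
    """Return only the figures a human has signed off on."""
    return [f for f in figures if f.reviewed]


def pending_review(figures):
    """Return the labels of figures still waiting for a human check."""
    return [f.label for f in figures if not f.reviewed]


draft = [
    DraftFigure("Android installed base (%)", 53.0, reviewed=True),
    DraftFigure("iOS usage share (%)", 0.0),  # placeholder value, not yet checked
]

print("Ready:", [f.label for f in publishable(draft)])
print("Needs review:", pending_review(draft))
```

The design choice here is that model output defaults to unreviewed, so nothing reaches the reader unless a person has explicitly approved it.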

In summary, while LLMs represent a significant technological advancement, they come with specific challenges that affect their trustworthiness and usability. These models can assist in various tasks, but careful consideration is needed when interpreting their output.