Gemini Robotics: Ushering in a New Era of AI-Driven Robots

Introduction

In March 2025, Google DeepMind introduced Gemini Robotics, a technology intended to change how robots function in industrial and home settings. Historically, factory robots have been built for efficiency: they execute jobs swiftly and precisely, much like the mechanical parts of a vehicle. These robots typically operate in stable environments and cannot adjust to changes such as a person crossing their path. Consequently, they are usually enclosed within safety cages to prevent accidents.

Gemini Robotics is shifting this norm by employing advanced artificial intelligence (AI). This technology enables robots to sense, adapt, and interact fluidly with their environment, making them safer and more functional in real-world scenarios.

The landscape of work is evolving. In sectors like automotive manufacturing, vehicle design cycles are getting shorter, so production lines must be reconfigured quickly. This shift makes single-purpose machines harder to justify over the long term.

Complications also arise when robots need to work alongside other devices. With traditional methods relying on fixed task sequences, efficiency and coordination can suffer.

Not all tasks are suited for full automation, whether because of cost or because they require flexibility. This is where cobots (collaborative robots) come into play. These robots are built to work in tandem with humans rather than alone, which makes safety a central design challenge. Cobots must detect collisions with people or other machines and must be able to modify their movements in real time. For instance, a cobot may slow down if a person comes too close, reducing the chance of accidental impact.
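The slow-down behavior described above can be sketched as a distance-based speed limit. This is a minimal illustration, not how any particular cobot or Gemini Robotics implements it: the function name `scaled_speed`, the threshold values, and the linear ramp between them are all assumptions chosen for the example.

```python
def scaled_speed(distance_m: float,
                 stop_distance_m: float = 0.3,
                 slow_distance_m: float = 1.5,
                 max_speed_mps: float = 1.0) -> float:
    """Return an allowed speed given the distance to the nearest person.

    All thresholds are hypothetical defaults for illustration only.
    """
    if distance_m <= stop_distance_m:
        # Person is inside the stop zone: halt completely.
        return 0.0
    if distance_m >= slow_distance_m:
        # Person is far away: full speed is allowed.
        return max_speed_mps
    # In between, ramp the speed linearly from 0 up to the maximum.
    fraction = (distance_m - stop_distance_m) / (slow_distance_m - stop_distance_m)
    return max_speed_mps * fraction


if __name__ == "__main__":
    for d in (0.2, 0.6, 1.0, 2.0):
        print(f"person at {d:.1f} m -> speed limit {scaled_speed(d):.2f} m/s")
```

A controller would call a function like this on every sensor update, so the limit tightens smoothly as a person approaches instead of switching abruptly between full speed and a stop.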

How Gemini Robotics Differs from Previous Approaches

Google DeepMind is using its AI models, particularly Gemini 2.0, to help robots better understand the physical world. The ambition is to create generalist robots that can carry out a variety of tasks with the same model while keeping users safe in ever-changing environments.

Testing has shown Gemini Robotics to be effective across a range of tasks, including ones it did not encounter during training. For example, older models trained solely for a specific task such as stacking blocks struggle with new commands, whereas Gemini Robotics draws on the general reasoning of its underlying model to adapt to varied instructions. Google DeepMind reports that it outperforms previous models when conditions change.

A notable advantage is its real-time interactivity. Gemini Robotics is built on a large language model that understands everyday speech and can adjust a task immediately in response to user instructions. If interrupted, it can reassess the situation and follow the new directions while maintaining safety and efficiency.

Previous robotic systems tended to become rigid once a task began and often struggled with unexpected changes. Gemini Robotics, by contrast, continually re-evaluates its plan as it works. This human-like adaptability makes it more useful in practice.
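The difference between a fixed task sequence and continual re-evaluation can be shown with a toy control loop. This sketch makes no claim about Gemini Robotics internals: the `plan` function is a hand-written stand-in for a model, and the instruction names, the `interruptions` mapping, and the single `holding_block` state flag are all hypothetical.

```python
def plan(instruction: str, holding_block: bool) -> list:
    """Hypothetical planner: returns the remaining steps from the current state."""
    # If the block is already in hand, there is no need to grasp it again.
    steps = [] if holding_block else ["grasp block"]
    if instruction == "stack":
        steps.append("place on stack")
    elif instruction == "bin":
        steps.append("drop in bin")
    else:
        raise ValueError(f"unknown instruction: {instruction}")
    return steps


def run(instruction: str, interruptions: dict, max_ticks: int = 10) -> list:
    """Execute a task, re-planning whenever a new instruction arrives.

    `interruptions` maps a tick number to the instruction spoken at that tick.
    """
    holding_block = False
    executed = []
    steps = plan(instruction, holding_block)
    for tick in range(max_ticks):
        if tick in interruptions:
            # New instruction: re-plan from the current state, not from scratch.
            instruction = interruptions[tick]
            steps = plan(instruction, holding_block)
        if not steps:
            break
        action = steps.pop(0)
        executed.append(action)
        if action == "grasp block":
            holding_block = True
    return executed


if __name__ == "__main__":
    print(run("stack", {}))          # uninterrupted task
    print(run("stack", {1: "bin"}))  # user changes their mind after the grasp
```

Because the loop re-plans from the current state, an interruption after the grasp leads directly to the new goal without repeating the grasp. A fixed sequence would either finish the original task or have to restart.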

The Secret Under the Hood

Recent progress in AI has produced models that can process multiple input types and respond accordingly. Gemini 2.0 is one such multimodal model, handling text, images, audio, and video. Google DeepMind built Gemini Robotics on that foundation and added physical actions as an output type for controlling robots. The resulting Vision-Language-Action (VLA) model forms the backbone of the system, allowing robots to interpret complex scenarios and execute tasks in human environments.

A key innovation in this framework is an intermediate reasoning layer that assesses safety and ensures actions are executed based on real-time evaluations. This design allows for continuous adjustment of outputs, granting robots the capability to respond instantly to their surroundings.
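One way to picture an intermediate layer that checks safety before execution is a gate between the model's proposed action and the robot's actuators. The sketch below is an assumption-heavy illustration, not DeepMind's design: the `Action` fields, the workspace bounds, and the force limit are hypothetical values invented for the example.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical limits for illustration only.
WORKSPACE_MIN = (-0.5, -0.5, 0.0)  # metres
WORKSPACE_MAX = (0.5, 0.5, 0.8)    # metres
MAX_GRIP_FORCE_N = 20.0            # newtons


@dataclass(frozen=True)
class Action:
    name: str
    target_xyz: tuple      # (x, y, z) target position in metres
    grip_force_n: float    # requested gripper force in newtons


def safety_violations(action: Action) -> list:
    """Return a list of human-readable reasons the action is unsafe."""
    problems = []
    for axis, value, low, high in zip("xyz", action.target_xyz,
                                      WORKSPACE_MIN, WORKSPACE_MAX):
        if not low <= value <= high:
            problems.append(f"{axis} target {value} outside [{low}, {high}]")
    if action.grip_force_n > MAX_GRIP_FORCE_N:
        problems.append(
            f"grip force {action.grip_force_n} N exceeds {MAX_GRIP_FORCE_N} N")
    return problems


def gate(action: Action) -> Optional[Action]:
    """Pass the action through only if every check succeeds."""
    return None if safety_violations(action) else action


if __name__ == "__main__":
    proposed = Action("reach", (0.9, 0.0, 0.3), 5.0)
    print(gate(proposed), safety_violations(proposed))
```

Running the gate on every proposed action, rather than once per task, is what lets such a layer react to conditions that change mid-task.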

Gemini Robotics Highlights

According to Google DeepMind, three core features define Gemini Robotics' advancements: generality, interactivity, and dexterity.

Generality: Adapting to the Unexpected

Generality means a robot can adjust to new and challenging situations. By drawing on the substantial world knowledge in the Gemini model, Gemini Robotics handles unfamiliar objects, instructions, and environments. This capability lets robots move beyond strictly programmed tasks and function effectively in a fast-changing world. Google DeepMind reported that Gemini Robotics more than doubles performance on a comprehensive generalization benchmark compared with other state-of-the-art vision-language-action models.

Interactivity: Understanding and Responding Naturally

Interactivity speaks to the robot’s ability to interpret and react to commands effectively. With its advanced language processing, Gemini can respond intuitively to natural language instructions, adapt seamlessly to sudden changes in tasks, and continue functioning without needing explicit reprogramming. This responsiveness is vital for efficient operation in human environments.

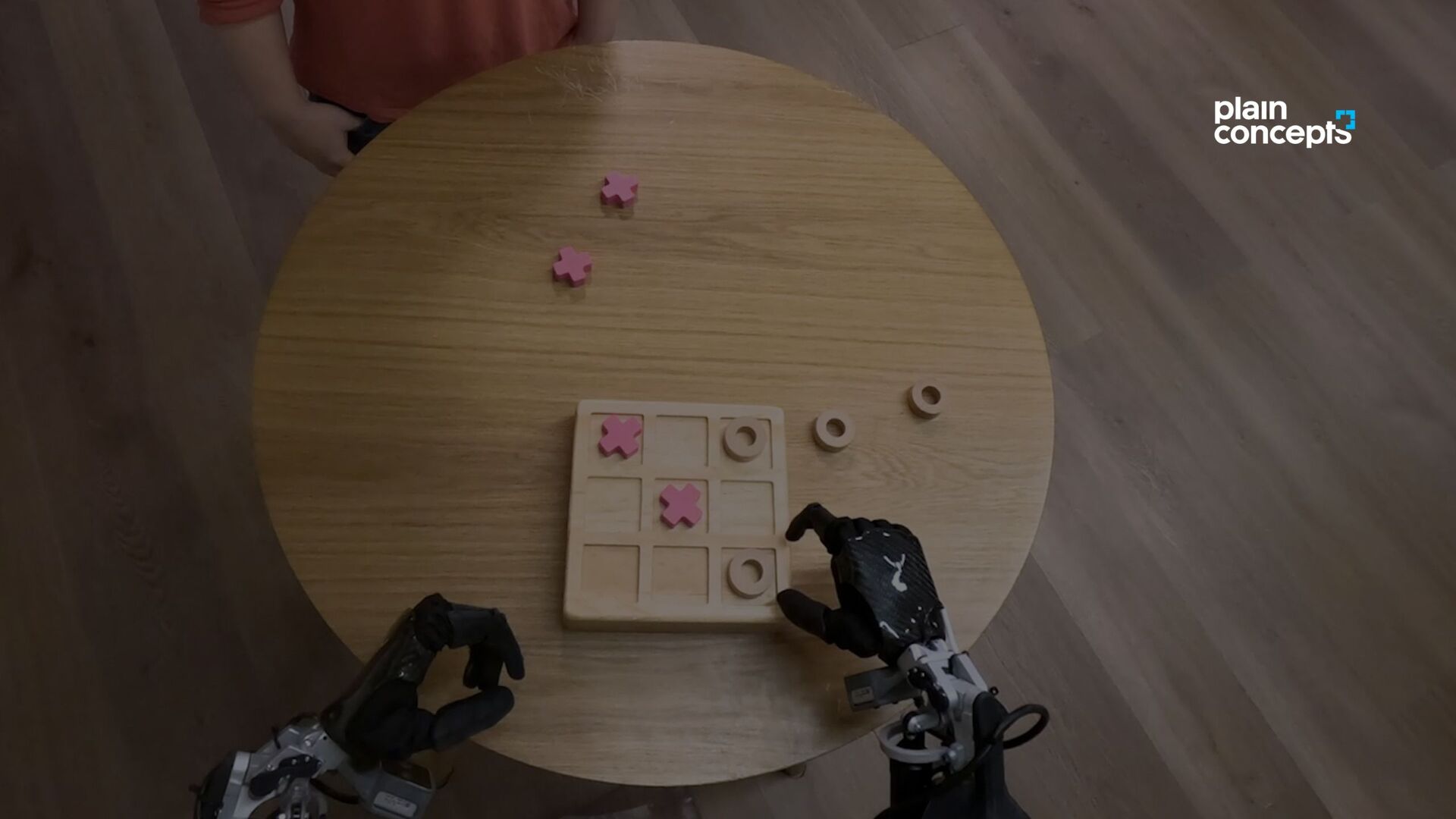

Dexterity: Mastering Fine Motor Skills

Dexterity relates to the robot’s skill in handling intricate tasks that require finesse. Gemini Robotics has made significant strides here, enabling robots to perform activities such as folding origami, preparing meals, or assembling complex items. The focus on dexterity opens the way for robots to assist in subtler, human-centered tasks that have traditionally been beyond their reach.

Gemini Robotics Model Family

Google DeepMind has introduced two specific AI models within the Gemini Robotics framework:

- Gemini Robotics: This serves as the general AI model for robotics, expanding upon DeepMind’s Gemini 2.0 foundation to include robotic control.

- Gemini Robotics-ER: This model focuses on embodied reasoning (the "ER" in its name), improving spatial understanding and reasoning about how to interact with objects.

Business Adoption

The capabilities offered through Gemini Robotics pave the way for numerous applications across various sectors. These range from building general-purpose robots to next-gen humanoid robots aimed at assisting in different environments.

Notable partnerships include one with Apptronik, which is integrating Gemini Robotics into its Apollo humanoid robot for logistics work. The partnership is an early example of applying Gemini Robotics to humanoid robots in real-world settings.

Moreover, vendors like Agile Robots and Boston Dynamics are currently evaluating the Gemini Robotics-ER model, which indicates strong industry interest in the technology’s potential for practical use.

These collaborative ventures are crucial for translating advanced AI research into functional solutions and provide ongoing feedback to enhance the technology further.