AMD Foresees Mobile and Laptop Inference as the Future, Sees Potential to Compete with NVIDIA’s AI Leadership

The Shift to Edge AI Devices: AMD’s Perspective

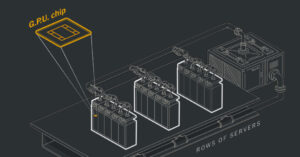

Advanced Micro Devices (AMD) is staking out a position on a key question in artificial intelligence (AI): where inference will run. AMD's Chief Technology Officer, Mark Papermaster, has said that inference, which today is handled largely in data centers, will increasingly move to consumer devices such as smartphones and laptops.

Understanding Inference vs. Training

Initially, the tech industry was focused on the excitement surrounding AI model training, and companies invested heavily in compute to train large language models (LLMs). The industry now appears to be shifting its attention to inference, the process of running trained models to perform specific tasks. Papermaster argues that this shift is pivotal and that AMD is well positioned to lead in it.

A Major Shift Towards Edge AI

In a recent interview with Business Insider, Papermaster shared his view on the future of inference. He said that, over time, a large share of inference will run on "edge devices," the smaller computing devices that sit close to the end user. "We're just seeing the tip of the spear now, but I think this moves rapidly," he remarked, adding that the actual timeline will depend on the development of applications that can use AI effectively on these devices.

Reasons Behind the Shift

Several factors are driving this shift toward edge AI:

- Cost Efficiency: The rising operational costs of AI computation in data centers are pushing major tech companies, such as Microsoft, Google, and Meta, to explore alternatives.

- User Preference: Consumers tend to favor devices that deliver instant, local AI features, which improves their overall experience.

- Advancements in Technology: Innovations in AI model design, such as those popularized by DeepSeek's models, focus on improving the performance and efficiency of AI models, making them more practical to run on local hardware.

AMD’s Commitment to AI Innovation

AMD's investments in AI are reflected in its recent product releases. Its latest APU (Accelerated Processing Unit) lineups, including Strix Point and Strix Halo, integrate dedicated AI processing into compact devices, underscoring AMD's focus on the evolving demands of AI and edge computing.

The Future of AI Devices

Papermaster envisions a future in which consumer devices can run advanced AI models locally, giving users the full benefits of AI and improving both functionality and user experience. He also suggests that competition in AI will increasingly center on inference rather than on model training, the area where NVIDIA has traditionally held a significant lead.

In essence, AMD's strategy points to a significant change in how AI is deployed. As the company aims to disrupt NVIDIA's dominance by focusing on edge devices and local inference, the conversation around AI appears to be shifting with it.