Why OpenAI Is Delaying the Release of Its Deep Research Model in the API

OpenAI Refines Its Approach to AI Persuasion Research

Understanding OpenAI’s Recent Decisions

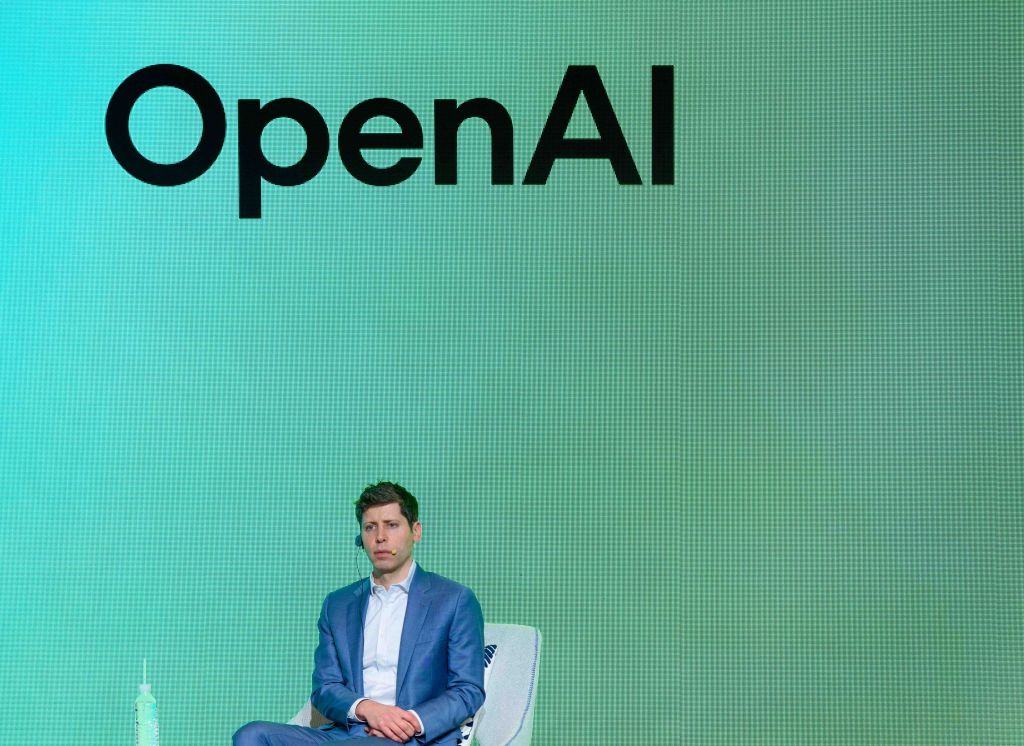

OpenAI recently announced that it will not make the model powering deep research, its in-depth research tool, available to developers through its API while the company reassesses how to evaluate the risk of AI influencing people’s beliefs and actions. The decision stems from concerns about AI’s potential to spread misleading information.

Updated Insights from OpenAI

In a newly released whitepaper, OpenAI said it is revising its methods for probing models for “real-world persuasion risks,” with a particular focus on how AI might be used to distribute misleading information at scale.

OpenAI does not believe the deep research model is well suited to mass misinformation campaigns, given its high computing costs and relatively slow speed. Nonetheless, before bringing the model to its API, the company is investigating how AI could be used to create personalized persuasive content with potentially harmful effects.

OpenAI stated, “While we work to reconsider our approach to persuasion, we are only deploying this model in ChatGPT and not the API.” This cautious approach underscores the importance of responsible AI development.

Concerns Over AI and Misinformation

Much of the current concern about AI centers on its role in spreading falsehoods that could manipulate public opinion. During Taiwan’s recent elections, for instance, misleading AI-generated deepfake audio clips circulated in which politicians appeared to endorse certain candidates. The incident showed how easily the technology can be misused to influence politics.

Consumers and businesses also face threats from sophisticated AI-driven social engineering attacks. In numerous cases, people have suffered significant financial losses after being tricked by deepfakes of celebrities promoting fraudulent investment opportunities, and corporations have lost millions of dollars to deepfake impersonations.

Performance of the Deep Research Model

In its whitepaper, OpenAI described several tests measuring the persuasiveness of the deep research model, a specialized version of its new o3 “reasoning” model optimized for web browsing and data analysis.

When tasked with writing persuasive arguments, the model outperformed OpenAI’s previously released models but did not surpass human-written responses. In another test, it successfully persuaded another AI model to make a hypothetical payment.

However, the model did not excel across the board: it was worse than GPT-4o itself at persuading GPT-4o to reveal a specific codeword.

Future Prospects

OpenAI acknowledges that the test results likely represent only the "lower bounds" of the deep research model’s persuasive capabilities, since additional enhancements or improved techniques for eliciting the model’s capabilities could substantially increase the performance observed in testing.

OpenAI also faces competitive pressure from rivals moving quickly to launch their own deep research products. Perplexity, for example, recently announced a deep research API powered by a customized version of R1, the model developed by Chinese AI lab DeepSeek.

Moving Forward

With this revised strategy, OpenAI appears to be taking a cautious approach to the risks AI poses around persuasion and misinformation. Ongoing evaluation of AI models will be crucial as the technology continues to evolve.