DeepMind Unveils Two New AI Models to Advance Robotics Development

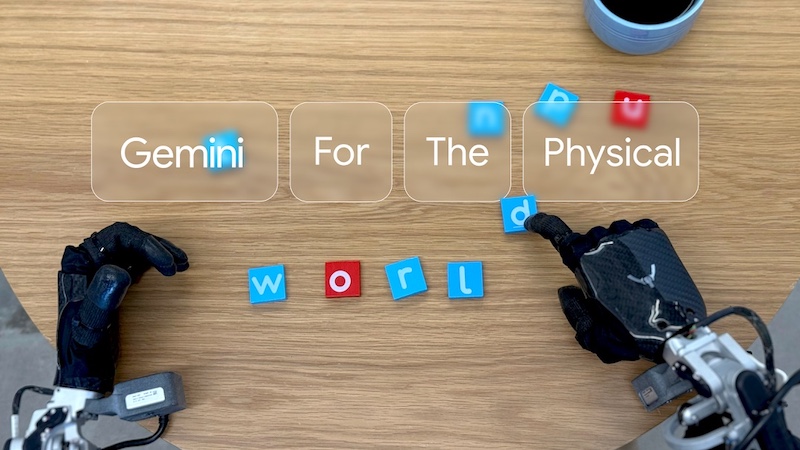

Google DeepMind has announced two artificial intelligence models, Gemini Robotics and Gemini Robotics-ER (the “ER” stands for “embodied reasoning”). According to the company, they mark a significant step toward AI systems that can control robots operating in real-world settings.

Gemini Robotics: Combining Vision, Language, and Action

The first model, Gemini Robotics, is a vision-language-action (VLA) model designed to control physical robots. What sets it apart from its predecessors is that physical actions are one of its outputs, so it can drive a robot directly. This lets it handle objects and respond to its surroundings in a more natural, human-like way.

Core Strengths of Gemini Robotics

Google DeepMind emphasizes that this model excels in three primary domains:

- Generality: It can adapt to a variety of tasks and scenarios.

- Interactivity: It responds to natural language commands in multiple languages.

- Dexterity: It performs intricate tasks, such as folding origami or packing items into boxes.

Gemini Robotics also runs on several robotic platforms, including dual-arm systems like ALOHA 2 and more sophisticated humanoid robots, such as Apptronik’s Apollo.

Gemini Robotics-ER: Enhanced Spatial Reasoning Capabilities

The second model, Gemini Robotics-ER, focuses on spatial and contextual understanding. It lets roboticists bring Gemini’s reasoning into their own robot architectures by connecting it to their existing low-level controllers, which increases the robots’ autonomy.

Advanced Functionalities of Gemini Robotics-ER

Gemini Robotics-ER significantly improves on Gemini 2.0’s capabilities in several technical areas:

- 3D Detection: Identifying objects in three dimensions.

- State Estimation: Estimating the position and state of objects.

- Planning: Strategizing movements and actions.

- Spatial Reasoning: Making sense of the environment based on spatial relationships.

For instance, when shown an object like a mug, Gemini Robotics-ER can determine an appropriate way to grasp it and plan a safe path for the movement. The model also uses in-context learning, which lets it pick up new tasks from just a few human demonstrations.
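The mug example can be pictured as a small pipeline: a detected 3D object goes in, and a grasp point plus a few waypoints come out for a low-level controller to follow. The sketch below is purely illustrative and is not DeepMind's method or API. The `DetectedObject` type, the `grasp_point` and `plan_path` functions, the "handle on the +x face" rule, and the 10 cm clearance are all assumptions made up for this example.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres, robot base frame


@dataclass
class DetectedObject:
    """Hypothetical output of a 3D detector: a labelled bounding box."""
    label: str
    center: Point
    size: Point  # box extents along x, y, z


def grasp_point(obj: DetectedObject) -> Point:
    """Choose where to grasp. Assumption: a mug's handle sits on its +x face."""
    cx, cy, cz = obj.center
    sx, _, _ = obj.size
    if obj.label == "mug":
        return (cx + sx / 2, cy, cz)
    return (cx, cy, cz)  # fall back to the box center for other objects


def plan_path(start: Point, goal: Point, clearance: float = 0.10) -> List[Point]:
    """Lift, traverse, descend: three waypoints that stay above both endpoints."""
    top = max(start[2], goal[2]) + clearance
    return [(start[0], start[1], top), (goal[0], goal[1], top), goal]


mug = DetectedObject("mug", center=(0.5, 0.0, 0.05), size=(0.08, 0.08, 0.10))
target = grasp_point(mug)
waypoints = plan_path(start=(0.0, 0.0, 0.20), goal=target)
```

In a real system the grasp choice and the path would come from the model and a motion planner respectively; the point here is only the division of labor between high-level reasoning and the waypoints handed to a controller.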

Ensuring Safety and Responsible AI Development

DeepMind describes a layered approach to safety, with safeguards that range from low-level motor control to high-level semantic understanding. Gemini Robotics-ER can be connected to traditional safety-critical low-level controllers, and it can also assess whether a task is safe to perform in a given context.
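One way to picture this layering is as two independent checks that a command must both pass: a high-level judgment about whether the task is safe in context, and a low-level check against hard physical limits. The sketch below is a toy illustration under stated assumptions, not DeepMind's implementation. The `Command` type, the keyword list (a stand-in for a model's semantic judgment), and the speed and force limits are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Command:
    """Hypothetical robot command for illustration only."""
    description: str  # natural-language description of the task
    speed: float      # requested end-effector speed, m/s
    force: float      # requested contact force, N


# Assumption: a keyword list stands in for a learned semantic-safety judgment.
UNSAFE_PHRASES = ("knife toward person", "hot liquid over")

# Assumption: illustrative hard limits enforced by the low-level layer.
MAX_SPEED = 0.5   # m/s
MAX_FORCE = 20.0  # N


def semantically_safe(cmd: Command) -> bool:
    """High-level layer: is the task itself acceptable in context?"""
    text = cmd.description.lower()
    return not any(phrase in text for phrase in UNSAFE_PHRASES)


def within_limits(cmd: Command) -> bool:
    """Low-level layer: are the requested speed and force inside hard limits?"""
    return 0.0 <= cmd.speed <= MAX_SPEED and 0.0 <= cmd.force <= MAX_FORCE


def approve(cmd: Command) -> bool:
    """A command runs only if every layer independently accepts it."""
    return semantically_safe(cmd) and within_limits(cmd)
```

Keeping the layers separate means a failure in the high-level judgment cannot override the hard limits, and vice versa.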

To advance safety research, DeepMind has released a dataset named ASIMOV, after the science fiction writer Isaac Asimov and his Three Laws of Robotics. Researchers can use it to evaluate the semantic safety of robotic actions and to help establish guidelines that steer robot behavior.

DeepMind is partnering with Apptronik on humanoid robots, and Gemini Robotics-ER is also being evaluated by several industry partners, including Boston Dynamics, Agility Robotics, Agile Robots, and Enchanted Tools.

DeepMind says it will keep refining these models, with the aim of building versatile, safe, and helpful robotic systems and extending what AI can do in robotics.