Did Meta Attempt to Regulate AI-Generated Images in 2024?

Understanding AI-Generated Content and Its Impact on Social Media

The Rise of AI and Social Media Platforms

Social media platforms like Facebook have become a popular way for fans to follow their favorite musicians and artists. For instance, many users turn to social media for updates from bands like Nick Cave and the Bad Seeds. A recent incident involving an Australian teacher named "Jake" illustrates a serious risk on these platforms. After watching a Facebook video in which Cave appeared to promote a lucrative investment opportunity, Jake followed the advice. The video was not genuine, and he lost AUD 130,000 to a scam that relied heavily on AI technology.

AI-Driven Scams

AI has become a tool for various scams, with scammers impersonating celebrities to build trust and trick users. Jake fell victim to a con that used deepfake technology to create a misleading advertisement. As Meta, Facebook’s parent company, continues to adjust its policies on AI-generated content, it is worth understanding what these changes mean for users.

Meta’s Efforts to Regulate AI Content

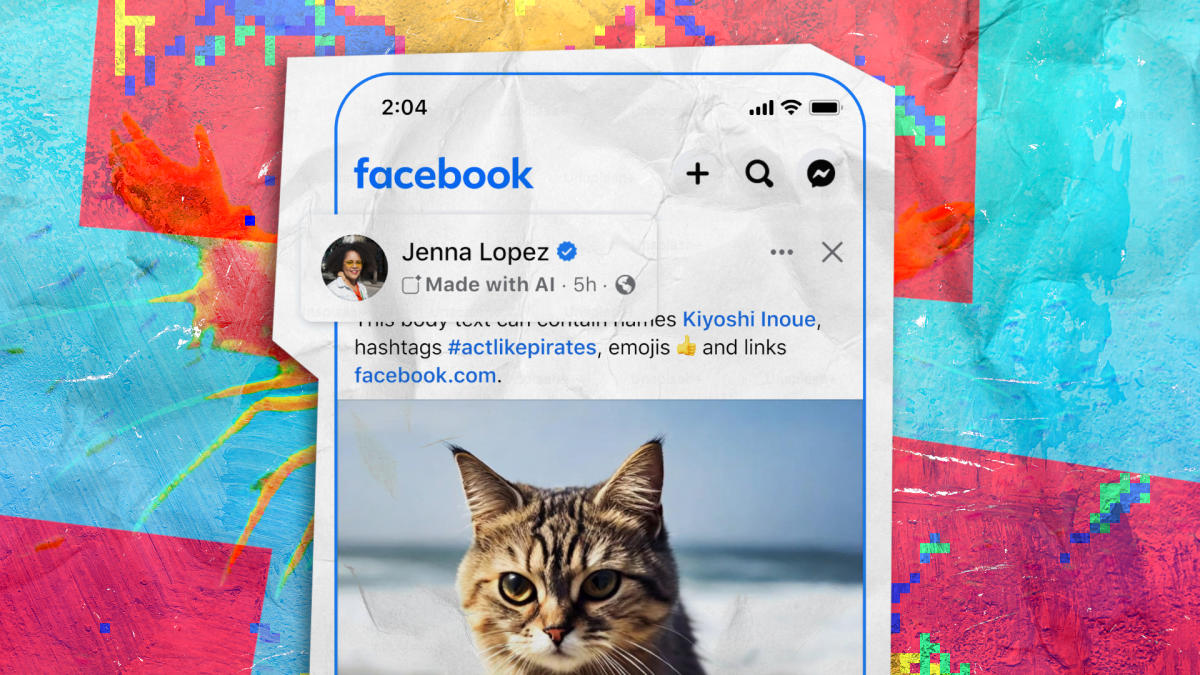

In February 2024, Meta introduced a labeling system to help users identify content generated or altered by AI. The move was intended to combat deception and protect users. Below are some significant changes Meta made to its policy over the course of the year:

- Expanded Labeling System: Initially announced in February, Meta’s labels flagged images and videos containing AI content, whether they were entirely AI-generated or merely modified.

- Policy Adjustments: Over time, Meta refined its approach to content labeling. For example:

  - In April, it stopped removing AI-manipulated media that did not breach community standards and broadened the label’s applicability to include videos, audio, and pictures.

  - By May, it specified that only organic content (user-generated media) would be labeled.

  - In July, the label changed from "Made with AI" to "AI info," making it more inclusive but also more ambiguous about how AI tools were used.

  - In September, Meta moved AI labels into the post menu for content that was modified rather than created by AI, which left the responsibility for labeling uploads properly with users.

Failure to comply with these labeling requirements could lead to penalties, including a “shadow ban,” a particular risk for accounts that rely on monetization through the platform.

Effectiveness of AI Content Labeling

Stickers and labels on AI-generated content have been hailed as a positive step toward greater user awareness, though confusion persists about AI terminology and what the labels mean. Research has shown that not all label wording is equally effective, so user understanding may vary significantly.

Labeling AI-generated content can help mitigate the risks posed by deepfakes and other manipulative practices. Yet the real question is whether these measures can significantly reduce the spread of misleading content on social media.

Fear of Scams Persists

Recent investigations have highlighted the continuing use of AI-generated media in scams. Reports from news stations describe con artists using AI-created images of soldiers to lure victims into romance scams, resulting in heavy financial losses.

Despite Meta’s ongoing efforts to enhance its AI labeling tools and develop new detection processes, users must remain vigilant. As AI technologies advance, so do the tactics of bad actors, making it crucial for users to stay informed and cautious.

Social media platforms are at the forefront of these emerging technologies, which exposes both their potential and their pitfalls. Changing policies and new labeling systems aim not only to protect users but also to foster a safer online environment as AI continues to evolve.