Google Introduces Physical Form to Gemini Through New AI Robotics Initiative

Key Points

- Google has launched Gemini Robotics and Gemini Robotics-ER, two models that extend Gemini's AI into physical interaction with the world.

- Project Astra, an experimental assistant, improves AI assistants’ ability to recognize and respond to real-world objects in real time.

- CEO Sundar Pichai emphasizes the potential of Gemini Robotics to help robots adapt to their environments.

Introduction to Google’s Gemini Robotics

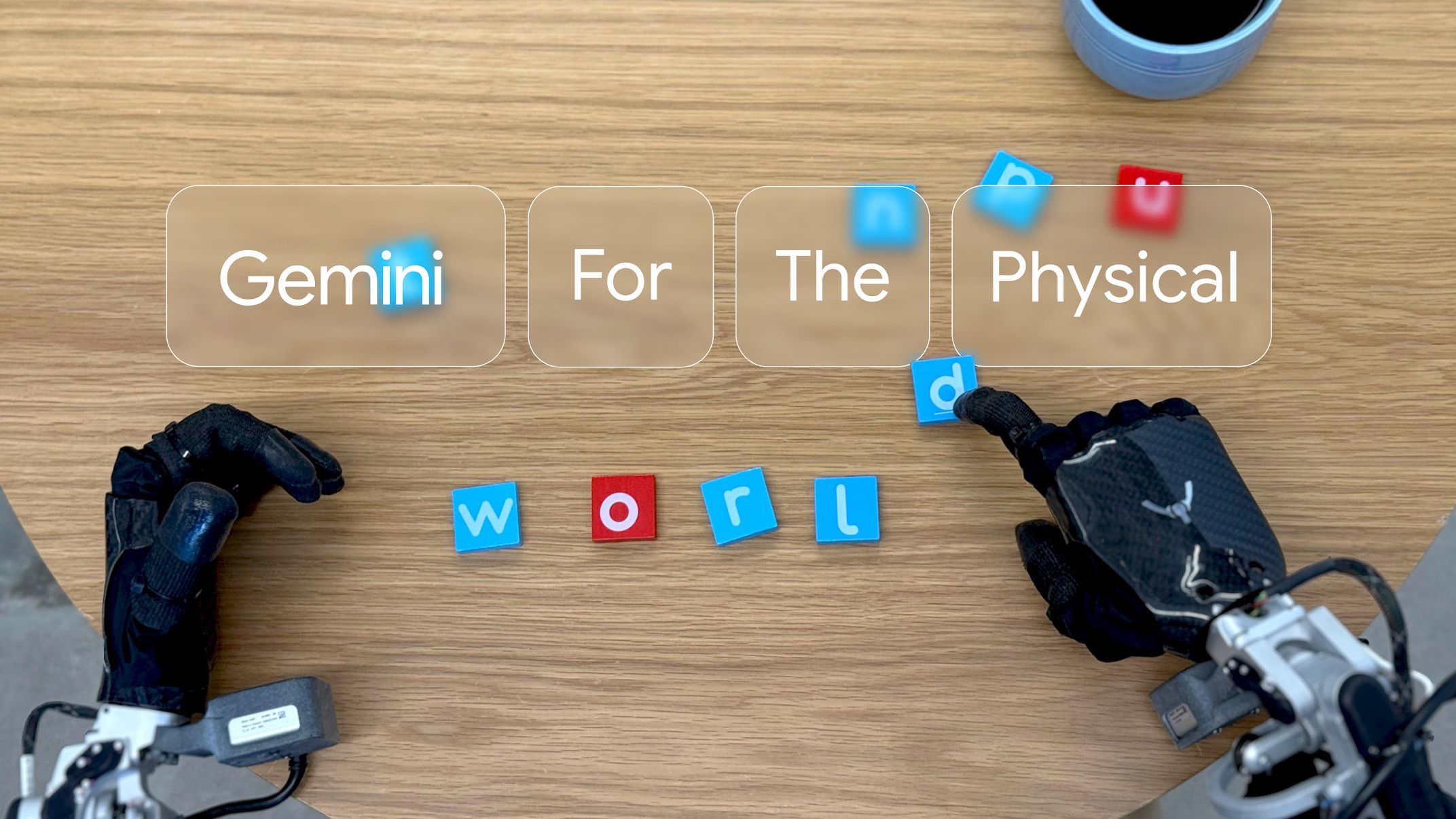

Google has unveiled Gemini Robotics and Gemini Robotics-ER, two AI models built on Gemini 2.0 that aim to bring its capabilities into the physical world. The goal is robots that do more than execute pre-programmed tasks: they should perceive their environment and respond to it intelligently.

Understanding the AI Models

Gemini Robotics

Gemini Robotics is a vision-language-action (VLA) model. It builds on Gemini 2.0 and adds physical actions as a new output, so the model can directly control a robot rather than only describe what it sees. This lets a robot interpret its surroundings and carry out tasks that involve handling physical objects. For instance, given camera input and an instruction, a robot could pick up an item and place it somewhere else.

Gemini Robotics-ER

Gemini Robotics-ER focuses on embodied reasoning (the "ER" in its name) and advanced spatial understanding. Rather than being a drop-in controller, it is designed to let roboticists run their own programs on top of Gemini's embodied-reasoning abilities, so developers can combine it with their existing robot software to handle more complex tasks. Because it draws on Gemini 2.0, robots built this way could adapt to a wider range of environments, making them more versatile in real-world applications.

Project Astra: Enhancing AI Interaction

Project Astra is another part of Google’s vision for AI. It is an experimental assistant that uses a device's camera and microphone to respond in real time. For example, if a user holds up a water bottle during a live video session, Project Astra can recognize the object and respond to questions about it. This moves beyond traditional text-based AI responses toward a more visual, conversational form of interaction.

CEO Sundar Pichai’s Insights

Google CEO Sundar Pichai expressed his excitement about these developments. He noted that the Gemini Robotics models can draw on Gemini’s multimodal understanding of the world. In practice, this means robots could combine several kinds of input, such as visual and spatial information, to make decisions in real time and adapt as situations change.

The Future of AI Assistants

Together, Gemini Robotics and Project Astra point toward a new generation of AI assistants. As these models mature, they could change how people interact with technology in daily life. Combining physical action with real-time perception marks a significant shift in AI’s role: from responding to commands to actively engaging with the physical world.

Looking Ahead: Upcoming Developments

As Google continues to work in this space, further announcements and demonstrations are likely, for example at events such as Google I/O 2025. The progress of Gemini Robotics and Project Astra suggests a future in which AI assistants can meaningfully help with everyday tasks because they understand and respond to the physical world.