Meta: AI Contributed to Under 1% of Election Misinformation on Our Platforms

AI and Misinformation in Recent Elections

In a year in which roughly 2 billion voters took part in major elections worldwide, a recent Meta study indicates that the threat of AI-generated misinformation did not materialize as dramatically as some experts had feared.

Overview of Meta’s Findings

Meta, a company heavily invested in artificial intelligence and data infrastructure, tracked trends across its platforms during pivotal elections in the United States, Bangladesh, Indonesia, India, Pakistan, France, the United Kingdom, South Africa, Mexico, and Brazil, as well as the European Parliament elections. The study found that the risks associated with political deepfakes and AI-driven disinformation were minimal. According to Meta’s blog post, AI-generated content about elections and politics accounted for less than 1% of all fact-checked misinformation.

Instances of AI Misuse

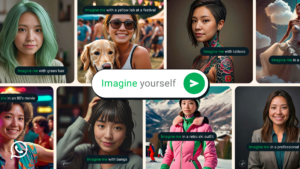

While Meta acknowledged some verified and suspected cases of AI being utilized to spread false information, the company emphasized that existing policies effectively curtailed these efforts. For example, in the month leading up to the US presidential election, Meta’s Imagine AI image generator blocked around 590,000 requests aimed at creating deepfakes of key political figures, including President-elect Trump, Vice President-elect Vance, and President Biden.

Preventing Foreign Interference

Additionally, Meta reported that it countered around 20 foreign interference operations targeting electoral processes this year. Operations aimed at influencing election outcomes came most notably from Russia, followed by Iran.

The Challenge of Misinformation on Other Platforms

Although Meta took steps to remove fake election-related content, including videos, some of those videos resurfaced on platforms such as X (formerly Twitter) and Telegram. Meta pointed out that these competing platforms have fewer protective measures in place. Recent scrutiny has highlighted how X’s AI chatbot, Grok, has been implicated in promoting political misinformation, while Telegram has faced criticism for its inadequate response to the proliferation of harmful content.

The Political Landscape

This study from Meta comes at a time when its CEO, Mark Zuckerberg, is contemplating the future of technology policy in the United States under the incoming administration of President-elect Trump. Although Trump has openly criticized Facebook in the past, there have been signs of a warming relationship: he was recently spotted dining with Zuckerberg, hinting at a potential shift in dynamics between tech leaders and government officials.

Summary of Key Points

- Voter Participation: Approximately 2 billion people voted across various significant elections.

- AI Misinformation: Only a small fraction (less than 1%) of fact-checked misinformation was attributed to AI.

- Deepfake Blockage: Meta’s Imagine AI image generator blocked around 590,000 requests to create deepfakes of key political figures in the month leading up to the US presidential election.

- Foreign Interference: Meta successfully thwarted about 20 foreign operations, primarily from Russia and Iran.

- Platform Safeguards: Other platforms like X and Telegram have faced challenges regarding the moderation of misinformation.

As the debate about AI’s role in spreading misinformation continues, this analysis provides a glimpse into the current landscape and the effectiveness of the measures in place to combat the misuse of technology during elections.