Meta Manipulated AI Benchmarks, Signaling a New Era of Innovation

Meta’s Controversial AI Benchmark: What Happened?

Recently, discussions have erupted over Meta’s claims surrounding their latest AI models, specifically regarding potential manipulation of benchmark scores. This incident has sparked curiosity and laughter in the tech community, notably highlighted by Kylie Robison at The Verge.

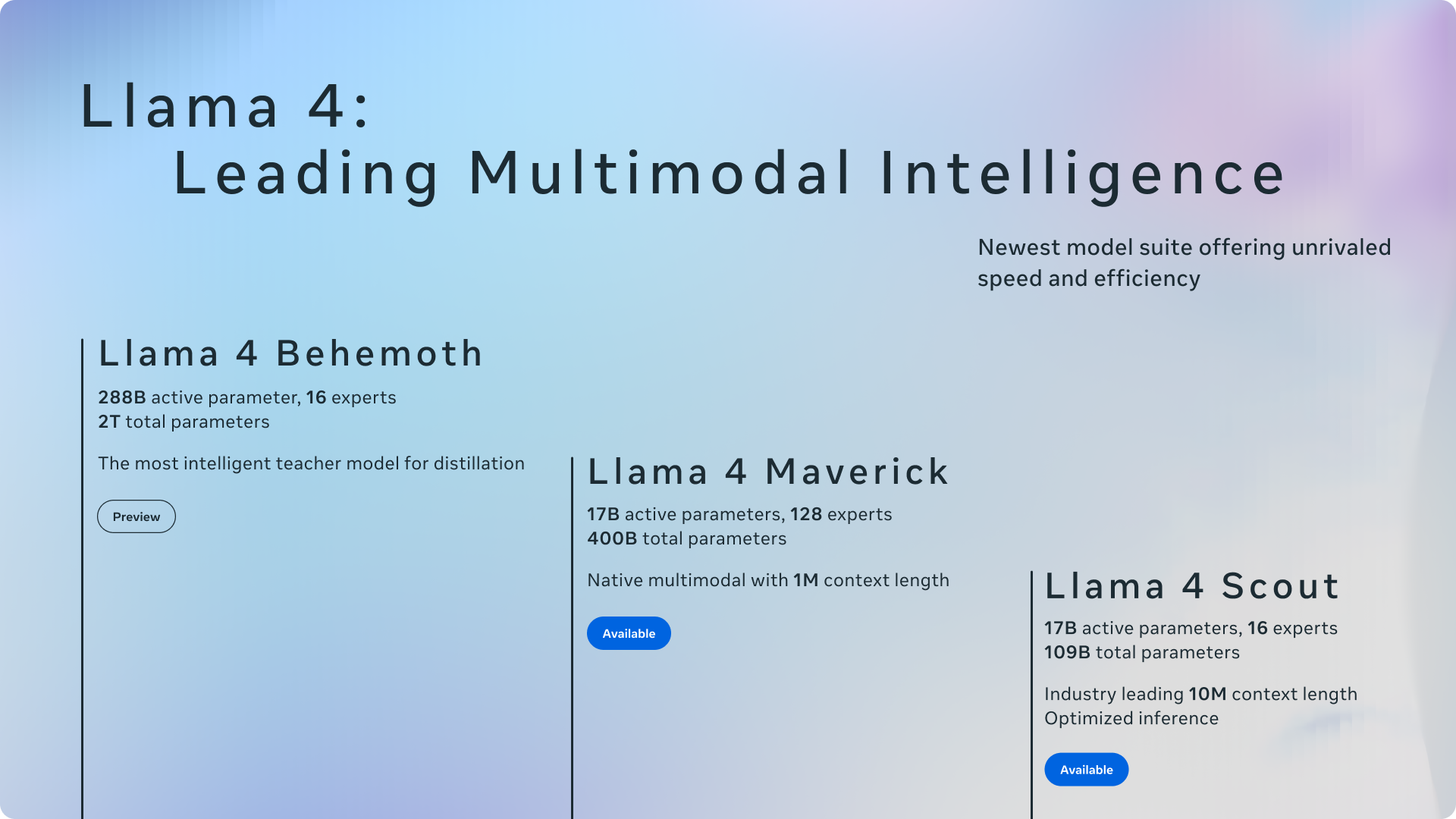

New AI Models from Meta

Meta introduced two new models based on its Llama 4 architecture: Scout and Maverick. Scout is designed for quick queries, while Maverick aims to compete with top-tier models like OpenAI’s GPT-4. As is typical for AI companies launching major updates, Meta’s announcement was accompanied by a flood of technical jargon and data showcasing how their models outperformed those from more established tech firms, including Google and Anthropic.

Maverick’s Impressive Benchmark Score

One particular score in Meta’s announcement caught the attention of AI enthusiasts: Maverick achieved an impressive ELO score of 1417 on LMArena. This platform enables users to vote on the best outputs from different models, with higher scores indicating better performance. Maverick’s score placed it second overall on the leaderboard, right behind Gemini 2.5 Pro and above GPT-4. As you might imagine, this sparked a wave of interest and surprise across the AI landscape.

Digging Deeper: The Fine Print

However, a closer examination revealed something crucial. Meta disclosed that the version of Maverick that attained this stellar score was not the standard model available to users. Instead, it had been tailored to be more engaging in conversation, essentially gaming the benchmarking process. This characteristic was not immediately clear to everyone, leading to some confusion.

Response from LMArena

LMArena did not take kindly to this revelation. They released a statement asserting that Meta’s explanation did not align with their expectations for model providers. The organization emphasized that Meta should have been more transparent about their experimental version, which significantly optimizes for user preferences. In light of this incident, LMArena announced plans to update their leaderboard policies to ensure future clarity and fair evaluations.

A Pattern in Consumer Technology

This situation is reminiscent of behaviors seen in various corners of consumer technology. Throughout my decade-long experience writing about tech products and overseeing benchmarking labs, I’ve observed numerous companies employing various strategies to enhance their performance scores. From adjusting display brightness to shipping cleaner versions of products to reviewers, it’s not uncommon for manufacturers to seek clever ways to exhibit superior performance.

The Future of AI Benchmarking

As competition intensifies among AI developers, such tactics may be on the rise. Currently, companies are eager to set their large language models apart from the pack. Given that many models offer relatively similar functions—such as assisting with essay writing—having an edge in energy efficiency or speed of response can be a valuable marketing tool. A slight increase in performance, even by a few percentage points, can mean the difference for consumers when making choices.

Evolving User Interfaces and Product Features

As these AI tools evolve and become more consumer-friendly, significant changes in user interfaces and features may occur. With platforms like the ChatGPT app exploring unique sections for different tasks, it’s clear that companies are considering various ways to highlight their advantages. Ultimately, having a chatty model may not suffice for a standout reputation if other factors come into play.

Closing Thoughts

The evolving landscape of AI and its benchmarking practices reveals the lengths to which companies will go to secure a competitive edge. As these technologies mature and integrate deeper into everyday products, the community can anticipate ongoing discussions and scrutiny surrounding their performance claims.