Scalable Memory Layers by Meta AI: Advancing Efficiency and Performance in Artificial Intelligence

Artificial Intelligence and the Memory Bottleneck Challenge

Artificial Intelligence (AI) is advancing more rapidly than ever, with large-scale models achieving remarkable capabilities. Models like GPT-4 and LLaMA represent the cutting edge: trained on massive datasets, they produce human-like text, aid decision-making, and boost automation across many sectors. However, as these models grow more powerful, a significant challenge arises: how to scale them efficiently without running into performance and memory constraints.

The Shift from Dense Layers

Deep learning has traditionally relied on dense layers, where every neuron in one layer is connected to every neuron in the next. This connectivity enables the discovery of complex patterns, but it has a downside: as layers grow wider, their parameter counts grow quadratically. This results in higher memory needs, longer training periods, and greater energy consumption, and AI research labs find themselves investing heavily in high-performance hardware to meet these demands.

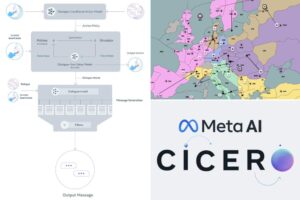

Meta AI’s Solution: Scalable Memory Layers (SMLs)

Meta AI is tackling the scaling challenge by introducing Scalable Memory Layers (SMLs), an approach designed to address the inefficiencies of dense layers. Instead of pushing every input through all of a layer's weights, SMLs add a large, trainable key-value memory from which each input retrieves only a few relevant entries. Separating where knowledge is stored from how much computation each input needs reduces the per-input workload, so models can grow in capacity without a proportional increase in hardware resources.

Benefits of SMLs

- Efficiency in Training and Inference: Because each input reads only a small fraction of the memory, SMLs add capacity with little extra computation during both training and inference, which lowers cost.

- Dynamic Information Updates: Traditional dense models usually need retraining or fine-tuning to absorb new knowledge. Because SMLs store knowledge in addressable memory entries, they may make targeted updates and continual learning easier.

- Reduced Computational Overhead: By skipping memory entries that are irrelevant to the current input, SMLs avoid much of the work a dense layer of similar size would do, freeing processing power for the rest of the model.

Understanding the Limitations of Dense Layers

How Dense Layers Function

Dense layers operate on the principle that every neuron connects to every neuron in the next layer, which lets them capture complex relationships between inputs. This design is central to tasks such as image and speech recognition, as well as natural language understanding. During training, models adjust the weights of these connections to improve accuracy, but as models become larger, inefficiencies begin to emerge.
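A minimal NumPy sketch of one dense (fully connected) layer makes this connectivity concrete. The function names are illustrative and not taken from Meta's code: each of the `d_out` outputs is a weighted sum over all `d_in` inputs, so every weight is used for every input vector.

```python
import numpy as np

def dense_forward(x: np.ndarray, W: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Fully connected layer: each output unit is a weighted sum of all input units."""
    # x: (batch, d_in), W: (d_in, d_out), b: (d_out,)
    return x @ W + b

def dense_multiply_adds(d_in: int, d_out: int) -> int:
    """Multiply-adds per input vector: one per weight, and every weight is used."""
    return d_in * d_out

rng = np.random.default_rng(0)
d_in, d_out = 4, 3
W = rng.standard_normal((d_in, d_out))
b = np.zeros(d_out)
x = rng.standard_normal((2, d_in))       # a batch of two input vectors

y = dense_forward(x, W, b)
print(y.shape)                           # (2, 3)
print(dense_multiply_adds(d_in, d_out))  # 12
```

Nudging a single input value changes every output of that row, which is exactly the all-to-all dependence that makes dense layers expressive and expensive.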

The Challenges of Scaling with Dense Layers

One major issue with dense layers is their memory inefficiency. The number of parameters in a dense layer grows quadratically with its width, leading to significant memory and computational demands. Consequently, larger models require far more memory and processing power, which raises training costs and lengthens inference times.

Moreover, dense layers perform redundant computation. Every weight participates in processing every input, even when many neurons contribute little to the result, so processing power is spent on work that barely affects the output. This slows inference and wastes hardware. Dense models also cannot easily absorb new knowledge: even small updates typically require retraining or fine-tuning, which can be costly and impractical.
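The quadratic growth described above refers to layer width. A short pure-Python count (the helper name is our own) shows that doubling the width of a square dense layer roughly quadruples its parameters, whereas stacking more layers of a fixed width grows the count only linearly.

```python
def dense_params(d_in: int, d_out: int, bias: bool = True) -> int:
    """Number of weights (plus optional biases) in one fully connected layer."""
    return d_in * d_out + (d_out if bias else 0)

# Square layers (d_in == d_out == width): doubling the width roughly quadruples the count.
for width in (1024, 2048, 4096):
    print(width, dense_params(width, width))
# 1024 1049600
# 2048 4196352
# 4096 16781312
```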

How Scalable Memory Layers Optimize AI Knowledge Management

SMLs by Meta AI represent a substantial improvement in deep learning techniques. They store knowledge in a large memory that is queried on demand, so a model retrieves only the information relevant to each input. This minimizes unnecessary computation and makes AI systems more adaptable and scalable.

A core feature of SMLs is a trainable key-value lookup system: each input is turned into a query, matched against learned keys, and answered with a combination of the values attached to the best-matching keys. This lets models expand their memory capacity without overwhelming computational demands. Traditional architectures grow increasingly resource-intensive as they scale, but SMLs activate only a small part of their memory for each input, which can shorten training cycles and lower latency.
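A trainable key-value lookup can be sketched in NumPy as follows. This is a simplified illustration under our own assumptions, not Meta's implementation: `memory_lookup`, the random keys and values, and `k` are hypothetical, whereas in a real memory layer the keys and values are learned by gradient descent like any other weights. The query is scored against every key, only the `k` best-matching slots are kept, and their values are mixed with softmax weights, so only `k` rows of the value table are read.

```python
import numpy as np

def memory_lookup(query: np.ndarray, keys: np.ndarray, values: np.ndarray, k: int = 4):
    """Sparse key-value read.

    query: (d_key,), keys: (num_slots, d_key), values: (num_slots, d_value).
    Returns the mixed value vector and the indices of the slots that were read.
    """
    scores = keys @ query                          # similarity of the query to every key
    top = np.argpartition(scores, -k)[-k:]         # indices of the k highest scores (unordered)
    weights = np.exp(scores[top] - scores[top].max())
    weights /= weights.sum()                       # softmax over the k selected slots only
    return weights @ values[top], top              # only k value rows are touched

rng = np.random.default_rng(0)
keys = rng.standard_normal((1000, 16))             # 1,000 memory slots
values = rng.standard_normal((1000, 32))
query = rng.standard_normal(16)

out, used = memory_lookup(query, keys, values, k=4)
print(out.shape, len(used))                        # (32,) 4
```

Note that this naive version still scores every key, so its search cost grows with the number of slots; what it saves is reading and combining values. A practical memory layer also needs a cheaper way to find the best keys.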

Performance Boosts with SMLs

- Enhanced Memory Usage: SMLs decouple knowledge storage from computation, allowing for more efficient memory management.

- Faster Training and Inference: By activating only relevant information, SMLs can substantially reduce processing time compared with dense layers of similar parameter count.

- Scalability: Each input reads only a handful of memory entries, so compute per input grows far more slowly than memory size. This makes SMLs suitable for large-scale applications, although a very large memory still has to be stored and read from hardware.

- Cost and Energy Savings: The SML architecture can lower operational costs by reducing reliance on expensive hardware.
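The scalability point above depends on keeping the lookup itself cheap as memory grows, because scoring every key costs time proportional to the number of slots. One well-known way around this is product-key lookup, introduced in earlier Meta research on large memory layers. We assume it here for illustration, and the function and parameter names are hypothetical. The query is split in half, each half is scored against its own set of n sub-keys, and a slot's score is the sum of its two sub-key scores. This addresses N = n² slots while scoring only 2n sub-keys (about 2√N) plus k² candidate pairs, and it still returns the exact top-k slots.

```python
import numpy as np

def product_key_lookup(query, subkeys1, subkeys2, values, k=4):
    """Exact top-k read over n*n slots while scoring only 2*n sub-keys.

    query: (d,) with d even; subkeys1, subkeys2: (n, d // 2); values: (n * n, d_value).
    Slot (i, j) has flat index i * n + j and score s1[i] + s2[j].
    """
    n = subkeys1.shape[0]
    half = query.shape[0] // 2
    s1 = subkeys1 @ query[:half]                   # n scores for the first half of the query
    s2 = subkeys2 @ query[half:]                   # n scores for the second half
    top1 = np.argpartition(s1, -k)[-k:]            # the best slots must use one of these i ...
    top2 = np.argpartition(s2, -k)[-k:]            # ... and one of these j
    cand = s1[top1][:, None] + s2[top2][None, :]   # (k, k) candidate slot scores
    best = np.argpartition(cand.ravel(), -k)[-k:]
    rows, cols = np.unravel_index(best, (k, k))
    slots = top1[rows] * n + top2[cols]            # flat indices into the value table
    scores = cand[rows, cols]
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()                       # softmax over the k selected slots
    return weights @ values[slots], slots

rng = np.random.default_rng(0)
n, d, d_value = 128, 16, 32                        # 128 * 128 = 16,384 slots
subkeys1 = rng.standard_normal((n, d // 2))
subkeys2 = rng.standard_normal((n, d // 2))
values = rng.standard_normal((n * n, d_value))
query = rng.standard_normal(d)

out, slots = product_key_lookup(query, subkeys1, subkeys2, values, k=4)
print(out.shape, len(slots))                       # (32,) 4
```

Restricting the search to the top-k sub-keys on each side loses nothing: if a slot used a first-half sub-key outside the top k, pairing each of the k better sub-keys with the same second half would give k higher-scoring slots.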

Comparing Performance: SMLs vs. Dense Layers

The differences in performance between scalable memory layers and traditional dense layers can be summarized as follows:

Memory Efficiency

Dense layers often lead to significant memory constraints, while SMLs optimize memory usage by separating knowledge storage from computation.

Speed

Dense layers can slow down due to redundant computations; SMLs enhance speed by retrieving only the relevant data.
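The speed difference can be made concrete with rough multiply-add counts per token. This back-of-the-envelope sketch uses hypothetical sizes and simplifying assumptions of our own: a two-matrix feed-forward block stands in for the dense layer, the memory uses product-key lookup (each half of a query scored against √N sub-keys, a technique from earlier Meta memory-layer research) with value vectors of size `d_model`, and query projection and candidate scoring are ignored.

```python
import math

def dense_ffn_cost(d_model: int, d_hidden: int) -> tuple[int, int]:
    """(parameters, multiply-adds per token) for a two-matrix feed-forward block.

    Every weight is used for every token, so both numbers are the same.
    """
    params = 2 * d_model * d_hidden
    return params, params

def product_key_memory_cost(d_model: int, num_slots: int, k: int) -> tuple[int, int]:
    """(parameters, approximate multiply-adds per token) for a product-key memory.

    Assumes num_slots is a perfect square and value vectors have size d_model.
    Ignores query projection and the k*k candidate sums.
    """
    n = math.isqrt(num_slots)                       # sub-keys per query half
    half = d_model // 2
    params = 2 * n * half + num_slots * d_model     # two sub-key tables + value table
    work = 2 * n * half + k * d_model               # score all sub-keys, mix k value rows
    return params, work

print(dense_ffn_cost(1024, 4096))                   # (8388608, 8388608)
print(product_key_memory_cost(1024, 2**20, 32))     # (1074790400, 1081344)
```

Under these assumptions the memory holds about 128 times as many parameters as the feed-forward block while doing roughly an eighth of its arithmetic per token. Multiply-adds are not the whole story, though: reading value rows from a very large table is limited by memory bandwidth, so real speedups depend on the hardware and implementation.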

Scalability

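Growing a dense model increases both its parameters and its per-input compute, so added capacity demands additional hardware. SMLs can add memory capacity while keeping per-input compute nearly flat, which keeps costs more predictable and manageable.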

Cost-Effectiveness

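By adding capacity without a matching increase in computation, SMLs can lower the cost of training and serving large models. Overall, they are a more efficient and cost-effective alternative to traditional dense architectures, with promising implications for the future of AI.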