The Emerging AI Landscape: Google’s 80% Cost Advantage Compared to OpenAI’s Ecosystem


Rapid advances in generative AI are reshaping the technology landscape. OpenAI recently introduced its reasoning models, o3 and o4-mini, along with the GPT-4.1 series. In response, Google launched Gemini 2.5 Flash, which builds on the previously released Gemini 2.5 Pro. For enterprise technical leaders, navigating this fast-paced evolution involves much more than comparing model performance.

1. The Financial Mechanics: Google’s Advantage with Custom TPUs vs. OpenAI’s Nvidia Reliance

One of the most crucial, yet often overlooked, elements in this competition is the economic model behind these AI platforms. Google has spent the last decade developing its own Tensor Processing Units (TPUs), while OpenAI heavily relies on Nvidia’s GPUs. This reliance comes with significant costs.

Nvidia GPUs such as the H100 and A100 carry high price tags and substantial gross margins, estimated at about 80%. The result is an “Nvidia tax” for OpenAI: even with Microsoft Azure’s backing, it pays that markup on its compute. Google, by contrast, builds its own TPUs and avoids the markup, so it may obtain computing power at approximately 20% of what competitors using Nvidia hardware would pay.
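The 20% figure follows directly from the estimated 80% gross margin. The sketch below works through that arithmetic; the function names are hypothetical, and it assumes, as a simplification, that a company building its own chips pays roughly the production cost and nothing else (it ignores R&D, networking, power, and other overheads).

```python
# Minimal sketch of the margin arithmetic, under the simplifying assumption
# that an in-house chip maker pays only the production cost of its hardware.

def cost_fraction(gross_margin: float) -> float:
    """Return the share of the sale price that is the seller's own cost.

    Gross margin is (price - cost) / price, so cost / price = 1 - margin.
    """
    if not 0.0 <= gross_margin < 1.0:
        raise ValueError("gross_margin must be in the range [0, 1)")
    return 1.0 - gross_margin


def at_cost_spend(list_price_spend: float, gross_margin: float) -> float:
    """Return what the same hardware would cost without the seller's markup."""
    return list_price_spend * cost_fraction(gross_margin)


# The ~80% gross margin is the estimate quoted in the article.
NVIDIA_GROSS_MARGIN_ESTIMATE = 0.80

if __name__ == "__main__":
    ratio = cost_fraction(NVIDIA_GROSS_MARGIN_ESTIMATE)
    print(f"At-cost compute is about {ratio:.0%} of the list-price spend")
```

On this reading, every 100 units of spend at Nvidia list prices corresponds to roughly 20 units at cost, which is where the headline 80% advantage comes from.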

This cost disparity shows up in API pricing: OpenAI’s o3 model is considerably more expensive than Google’s Gemini 2.5 Pro. The comparison suggests that Google can sustain lower pricing, which translates into a lower and more predictable total cost of ownership for enterprises.

2. Framework for AI Agents: Google’s Versatile Strategy vs. OpenAI’s Integrated Approach

Google and OpenAI also differ significantly in how they approach building AI agents. Google is advocating for an open, interoperable system. At its recent Cloud Next event, it introduced the Agent2Agent (A2A) protocol, which lets AI agents built on different platforms communicate and collaborate with one another.

OpenAI, on the other hand, is focusing on highly capable agents that work seamlessly within its own ecosystem. Its new o3 model can make multiple tool calls within a single reasoning sequence. While this integrated approach aims for maximum performance, it may lack the flexibility that some enterprises seek.

- What this means for businesses: Enterprises looking for versatility might prefer Google’s open platform. Conversely, those already invested in Microsoft’s ecosystem may find OpenAI’s setup more efficient.

3. Comparing Model Capabilities: Strengths and Limitations

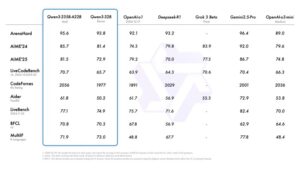

The race for leading AI models is ongoing, with OpenAI’s o3 currently outperforming Google’s Gemini 2.5 Pro in some coding tasks. However, Gemini holds the edge in other tasks and is recognized for its strong performance in the overall large language model arena.

Despite these differences, many core functionalities are nearly equal between the two. What sets them apart are their trade-offs:

- Context and Reasoning: Gemini 2.5 Pro supports large contexts of up to 1 million tokens, making it suitable for comprehensive data analysis. In contrast, o3 emphasizes in-depth reasoning using a smaller context window.

- Reliability versus Innovation: While o3 displays remarkable reasoning capabilities, it has been reported to produce more hallucinations compared to Gemini. Users may find Gemini to be a more reliable choice for crucial enterprise applications.

- For enterprises: Selecting between these models will depend on the specific tasks at hand. For processing large datasets, Gemini is superior, while o3 excels in scenarios demanding advanced reasoning.

4. Customer Integration and Distribution: Google’s Depth vs. OpenAI’s Reach

Ultimately, how easily a platform integrates into an organization’s current infrastructure is critical. Google’s offerings, including Gemini models and tools, provide a cohesive experience for existing Google Cloud customers, presenting a unified environment for their data and AI tools.

On the flip side, OpenAI’s partnership with Microsoft affords it remarkable reach. With the widespread availability of ChatGPT and its integration into Microsoft 365, organizations using these tools find it easier to adopt OpenAI’s solutions.

- Key takeaway: Businesses already committed to Google’s ecosystem may see great value in its integrated offerings, while those within Microsoft’s domain might prefer the accessibility of OpenAI’s services.

Comparing Google and OpenAI/Microsoft: Distinct Choices for Enterprises

The ongoing competition between Google and OpenAI/Microsoft is far more intricate than a comparison of individual AI models. The differing strategies for agent frameworks, the trade-offs in model capabilities, and the practical details of integration all play significant roles in enterprise decisions. At the heart of these considerations is compute cost, which will likely shape future decisions for businesses. Google’s cost-effective TPU strategy could prove decisive if OpenAI continues to grapple with the economic burden of Nvidia’s GPU pricing.