WHAMM! Interactive Environments with Real-Time World Modeling

Interactive Gameplay Experience with WHAMM

We’re excited to announce a new interactive gameplay experience in Copilot Labs: a real-time, AI-rendered version of Quake II, powered by Muse. It is available to try now in Copilot Labs.

The accompanying video clips show the Quake II environment being generated in real time, with WHAMM responding to a player’s controller inputs.

What are Muse and WHAMM?

Muse is Microsoft’s family of world models for video games. Following the introduction of Muse in February and the World and Human Action Model (WHAM) detailed in a recent Nature article [1], we are now unveiling WHAMM, the World and Human Action MaskGIT Model. The deliberately playful name adds an "M" to WHAM for MaskGIT [2], the generation technique at the core of the new model.

WHAMM is significantly more responsive than its predecessor, WHAM-1.6B, generating visuals at over 10 frames per second. That is fast enough for players to interact with the model in real time, with their actions reflected almost immediately in the generated game environment.

Improvements in Real-time Gameplay

Since the initial release of WHAM-1.6B, several improvements have been made:

- Faster Generation: WHAMM generates images at over 10 frames per second, compared with roughly one frame per second for WHAM-1.6B.

- Broader Game Application: WHAMM was trained on Quake II, a faster-paced first-person shooter whose gameplay dynamics differ from those of the game WHAM-1.6B was trained on.

- Data Efficiency: WHAMM was trained on just one week of gameplay data, compared with seven years of gameplay for WHAM-1.6B. This was possible because the data was collected in a focused way by professional game testers.

- Increased Resolution: WHAMM provides a higher output resolution of 640×360, up from 300×180, resulting in noticeably improved visual quality.
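The headline numbers in this list translate into concrete ratios with a few lines of arithmetic. This sketch uses only the figures quoted above; it treats "over 10 frames per second" as exactly 10 (so the speedup is a lower bound) and assumes a 365.25-day year when converting seven years into weeks.

```python
# Back-of-the-envelope comparison of WHAM-1.6B and WHAMM, using the figures
# quoted in the list above. "Over 10 fps" is treated as exactly 10.

WHAM_FPS = 1.0    # "around one frame per second"
WHAMM_FPS = 10.0  # "over 10 frames per second" (lower bound)


def frame_budget_ms(fps):
    """Time available to generate one frame, in milliseconds."""
    return 1000.0 / fps


# Training data: seven years of gameplay vs one week (365.25-day year assumed).
DAYS_PER_YEAR = 365.25
wham_data_weeks = 7 * DAYS_PER_YEAR / 7
whamm_data_weeks = 1.0


def pixels(width, height):
    return width * height


wham_pixels = pixels(300, 180)
whamm_pixels = pixels(640, 360)

print(f"Per-frame budget: {frame_budget_ms(WHAM_FPS):.0f} ms -> {frame_budget_ms(WHAMM_FPS):.0f} ms")
print(f"Training data: ~{wham_data_weeks / whamm_data_weeks:.0f}x fewer weeks of gameplay")
print(f"Pixels per frame: {wham_pixels:,} -> {whamm_pixels:,} (~{whamm_pixels / wham_pixels:.1f}x)")
```

In other words, each frame must be produced in at most about 100 ms, from roughly 365 times less gameplay data, while covering a little over four times as many pixels.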

Understanding WHAMM’s Architecture

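To support real-time interaction, WHAMM adopts a different modeling approach. WHAM-1.6B was autoregressive, generating one token at a time, so every frame required many sequential steps. WHAMM instead uses a MaskGIT-style setup [2] that predicts all of an image’s tokens in parallel and refines them over a small number of iterations. Needing far fewer sequential steps per frame lets the model react to player input much sooner.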
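A rough way to see the speed difference: an autoregressive model needs one sequential forward pass per token, whereas MaskGIT fills in all of a frame’s tokens over a fixed number of iterations. The sketch below counts how many tokens get committed at each iteration under the cosine masking schedule described in the MaskGIT paper [2]. The 256 tokens per frame and 8 iterations are hypothetical values for illustration, not WHAMM’s actual configuration.

```python
import math


def masked_after_step(num_tokens, step, total_steps):
    """Tokens still masked after `step` of `total_steps` MaskGIT iterations.

    Cosine schedule gamma(r) = cos(pi/2 * r): everything is masked at r = 0
    and nothing is masked at r = 1.
    """
    return math.floor(num_tokens * math.cos(math.pi / 2 * step / total_steps))


def commit_schedule(num_tokens, total_steps):
    """Number of tokens committed (unmasked) at each iteration."""
    committed = []
    prev_masked = num_tokens
    for step in range(1, total_steps + 1):
        masked = masked_after_step(num_tokens, step, total_steps)
        committed.append(prev_masked - masked)
        prev_masked = masked
    return committed


TOKENS_PER_FRAME = 256  # hypothetical
ITERATIONS = 8          # hypothetical

schedule = commit_schedule(TOKENS_PER_FRAME, ITERATIONS)
print(f"Autoregressive: {TOKENS_PER_FRAME} sequential passes per frame")
print(f"MaskGIT-style:  {ITERATIONS} sequential passes per frame")
print("Tokens committed per pass:", schedule)
```

The schedule commits only a few tokens early, when the model has the least information, and progressively more as the frame fills in.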

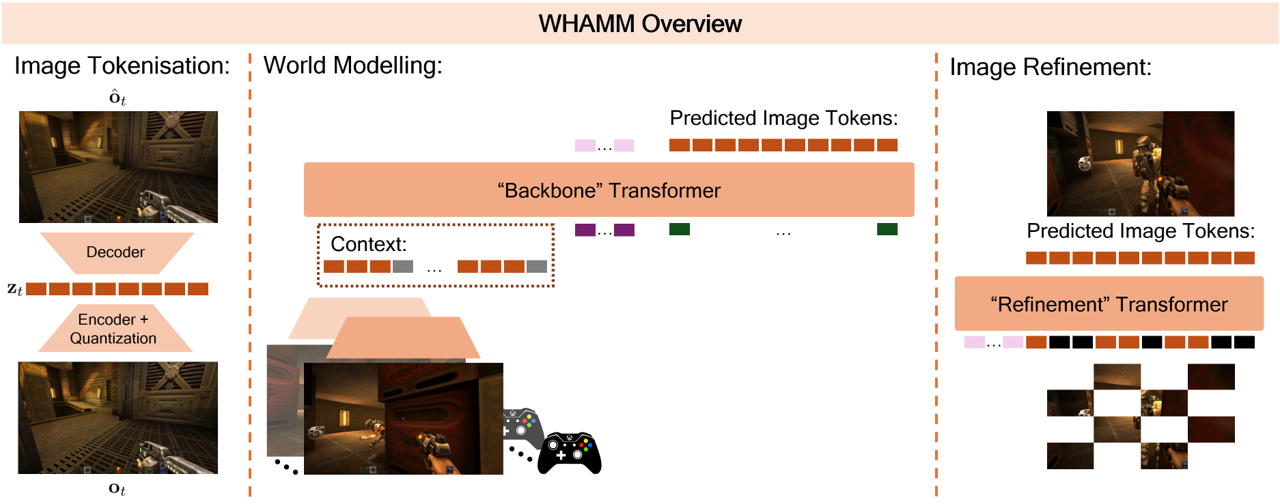

Figure 1: Overview of WHAM architecture, illustrating the tokenization process and training mechanism.

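The design has two main components. A Backbone transformer predicts the tokens for the entire next image, conditioned on the preceding frames and the player’s actions. A Refinement transformer then iteratively refines these initial predictions to make them more accurate. Throughout, images are handled as discrete tokens produced by an image tokenizer (see ViT-VQGAN [3]).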
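The two-stage design can be sketched as a single MaskGIT-style decoding loop in which the Backbone transformer performs the first pass over a fully masked frame and the Refinement transformer performs the remaining passes. This is an illustration under stated assumptions: it assumes the refinement follows the standard MaskGIT commit-the-most-confident loop, both transformers are replaced by a hypothetical random stub, and the codebook size, token count, and step count are made up. It shows the control flow only, not WHAMM’s implementation.

```python
import math
import random

# All sizes below are hypothetical, chosen small for illustration.
VOCAB_SIZE = 1024      # size of the image-token codebook
TOKENS_PER_FRAME = 64  # tokens per generated frame
TOTAL_STEPS = 4        # 1 backbone pass + 3 refinement passes


def stub_transformer(context, committed, positions, rng):
    """Hypothetical stand-in for either transformer.

    Returns {position: (token, confidence)} for each requested position.
    A real model would condition on `context` (previous frames and actions)
    and on the `committed` tokens; this stub ignores both and samples randomly.
    """
    return {p: (rng.randrange(VOCAB_SIZE), rng.random()) for p in positions}


def still_masked(step):
    """Tokens left masked after `step` passes (MaskGIT's cosine schedule)."""
    return math.floor(TOKENS_PER_FRAME * math.cos(math.pi / 2 * step / TOTAL_STEPS))


def generate_frame(context, seed=0):
    rng = random.Random(seed)
    committed = {}                          # position -> chosen token id
    masked = list(range(TOKENS_PER_FRAME))  # every position starts masked
    calls = {"backbone": 0, "refinement": 0}
    for step in range(1, TOTAL_STEPS + 1):
        # Pass 1 is the backbone's full-frame prediction; later passes are
        # refinement steps over the tokens that are still masked.
        stage = "backbone" if step == 1 else "refinement"
        calls[stage] += 1
        preds = stub_transformer(context, committed, masked, rng)
        # Commit the most confident predictions and leave the rest masked.
        masked.sort(key=lambda p: preds[p][1], reverse=True)
        n_commit = len(masked) - still_masked(step)
        for p in masked[:n_commit]:
            committed[p] = preds[p][0]
        masked = masked[n_commit:]
    frame = [committed[p] for p in range(TOKENS_PER_FRAME)]
    return frame, calls


frame, calls = generate_frame(context=[])
print(len(frame), calls)  # 64 {'backbone': 1, 'refinement': 3}
```

Splitting the work this way means only one call to the larger backbone per frame, with the remaining passes handled by the refinement model.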

Playing with Quake II WHAMM

In the WHAMM version of Quake II, you can walk around, aim, jump, and interact with elements of the level much as in the original game. The team put significant effort into making this experience work, from gathering high-quality gameplay data to iterating on prototypes until the game was playable.

The accompanying gameplay video shows a player exploring the level, including discovering hidden areas and interacting with the environment.

Limitations of WHAMM

Because WHAMM is a generative model designed primarily for research exploration, it has several known limitations. It is not intended to faithfully replicate the original Quake II experience. Key limitations include:

- Enemy Interactions: Enemy characters often appear blurry, and combat does not always behave as expected.

- Context Length: The model has a short context length, so objects that remain out of view for longer than this window may be forgotten.
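The context-length limitation follows from the model seeing only a fixed window of recent frames and actions. This minimal sketch assumes the context behaves like a first-in, first-out buffer and represents each frame by the set of objects visible in it (a hypothetical simplification; the real model works on image tokens). The window length and action names are illustrative, not WHAMM’s.

```python
from collections import deque


class ContextWindow:
    """Hypothetical fixed-length history of (frame, action) pairs.

    Each frame is simplified to the set of object names visible in it; a
    real world model would store image tokens instead.
    """

    def __init__(self, max_frames):
        # deque(maxlen=...) silently drops the oldest entry when full.
        self.history = deque(maxlen=max_frames)

    def push(self, visible_objects, action):
        self.history.append((visible_objects, action))

    def remembers(self, obj):
        """True if `obj` appears in any frame still inside the window."""
        return any(obj in objects for objects, _ in self.history)


ctx = ContextWindow(max_frames=4)  # illustrative length, not WHAMM's
ctx.push({"door", "barrel"}, "turn_left")
for _ in range(3):
    ctx.push({"wall"}, "move_forward")
print(ctx.remembers("barrel"))  # True: the barrel frame is still in the window
ctx.push({"wall"}, "move_forward")
print(ctx.remembers("barrel"))  # False: that frame has been evicted
```

Once the last frame showing an object falls out of the window, nothing in the model’s input records that the object exists, which is why it can vanish or change when the player looks back.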

- Counting Issues: The health counter is not always accurate and may not correctly reflect the player’s status.

- Scope Limitation: WHAMM currently covers only a single section of Quake II, so the playable area is small.

- Latency: Responses can lag noticeably, especially when many people are using the model at once.

Future Developments

WHAMM is an early step toward real-time generated gameplay. The team is excited about the new interactive experiences this could enable and about improving future models to address the limitations above.

Team Contributions

This initiative involved collaborative efforts from teams in Game Intelligence, Xbox Gaming AI, and Xbox Certification. Key roles included:

Model Training: Tabish Rashid, Victor Fragoso, Chuyang Ke.

Data Collection and Infrastructure: Yuhan Cao, Dave Bignell and several others.

Advisory and Leadership: Katja Hofmann and Haiyan Zhang led the project and provided guidance.

References

[1] Kanervisto, Anssi, et al. “World and Human Action Models towards gameplay ideation.” Nature 638.8051 (2025): 656-663.

[2] Chang, Huiwen, et al. “MaskGIT: Masked Generative Image Transformer.” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022.

[3] Yu, Jiahui, et al. “Vector-quantized Image Modeling with Improved VQGAN.” International Conference on Learning Representations (ICLR), 2022.